This post is based on the tests I did to see if storage spaces in Windows 2012R2 can serve as a platform for our Fast Track environments.

When Microsoft developed the Fast Track Data Warehouse architecture, which was at first very limited in hardware choice and for version SQL Server 2012 became a reference guide, Storage Spaces as a functionality in Windows did not exist.That has changed with the release of Windows 2012 and later on with version 2012R2 and soon 2016.

Why is Storage Spaces as a storage technology so interesting for SQL Server?

Anyone who is a pro in SQL Server knows that parallelism - adding more disks - can greatly improve performance. Adding an hard disk for the tempdb and another one for the LOG files will do the job if the hard disks perform sufficiently (that might be another issue!).

To my opinion (and also to others) SQL Server does not do a great job in using the available hardware. Before and after the installation it is mandatory to tune a SQL Server environment. Already the fact that just formatting the disks used for the data and the logs with 64KB blocks in NTFS can greatly improve performance. After the installation it is also required to set the "Perform Maintenance Tasks" parameter in the Local Security Manager to the account that is running the SQL Server service and also set the "Lock pages in Memory" parameter to prevent the operating system to interfere with the memory management of SQL Server.

And then there is the number of tempdb files and the extends you give them. This is finally changed in the installation of SQL Server 2016 where the install propose the number of tempdb files depending on the number of processors in the system. And the extends for the LOG and DATA files for the tempdb are all set to 64MB and no longer to the 10% for the DATA files and the 1 MB for the LOG files. Finally!

When to use Storage Spaces

When looking closer at Storage Spaces it is a highly parallelized storage system. Of cause in order to get the cookies you have to have the material. When your server has 1 controller and 4 disks you maybe can obtain a performance gain but I think a hardware RAID solution will be better.

However if you have a storage array with 2 controllers and a HBA with 2 connections or a JBOD with 2 controllers and a SAS controller with two connections Storage Spaces might be the way to go for you. Why?

Setting up your storage the traditional way (without Storage Spaces)

When you follow the traditional way, meaning creating RAID1 (mirror) or RAID1 + 0 (striped mirror) drives for more performance you end up having drives which has one or two times the size of the actual disks.

Anyone who starts by saying: "and RAID5 then?" should really question him or herself if he or she really knows how to build high performance SQL Server environments.

Then you tell the HBA controller that that volume will be used by that port and you balance the volumes over the 2 ports and you are ready to go. With a SAS controller you can not do that.

Performance in this RAID1 or RAID10 is also limited to roughly one or twice the performance of a single hard disk, in general 200 MB/s to 400 MB/s reading 512KB blocks and a latency that is not too great (that was an issue in the Microsoft SQL Server Fast Track architecture).

So here you are with several relative small volumes that when the server uses only one volume to collect the data is rather slow. The high performance you wanted can only be obtained when all the volumes are used in parallel. And that might not always be the case...

Setting up your storage using Storage Spaces

As I said before Storage Spaces profits highly from parallelism. So if you need to have 2 or more connections to the same disk and let the controllers manage all disks. If you don't do that and still try to manage access paths on HBA controllers you will not get the same performance as you would get if all HBA controllers can handle all disks. Because parallelism is all about that.

We did tests using existing SQL Server 2014 on Microsoft SQL Server Fast Track reference architectures and the more we limited the access paths of the HBA's - one HBA per disk array or even several disks per disk array - performance went down with several GB/s. We crippled actually the algorithm in Storage Spaces.

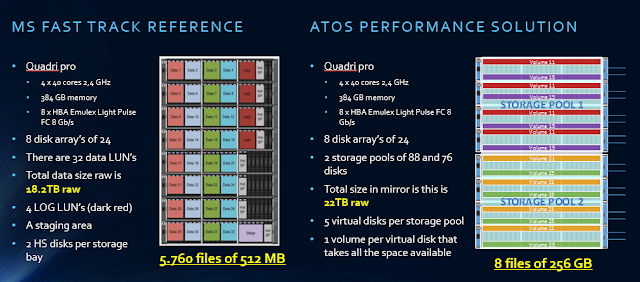

What we saw was that with Storage Spaces with had some loss on the GB/s using the standard SQLIO tests for a Fast Track but when we did the test with SQL Server 2014 reducing the 32 data LUN's to 8 data volumes and one dedicated tempdb and one for the LOG's we ended up in increasing the performance in SQL Server 2014 with more than 50% on the same hardware.

For the tests we used a 8 TB table with a clustered index that we compressed to almost 2 TB. We ran the same queries using between 300 million and 40 billion rows!

New architecture for Data Warehousing: ATOS Performance solutions

My tests on existing Microsoft Fast Track reference architectures provided a more than 50% performance improvement using the same hardware and can handle way more simultaneous users.

My newly developed ATOS Performance Solutions based on SQL Server 2014 with Windows 2012R2 Storage Spaces show that we are able serve at least a 100 users simultaneously with a good query performance (from 12s on a single user up to 90s for 100 simultaneous users).

How did we do it?

Like I explained in my previous post we created one storage space for all the hard disks (80) and one for all the SSD disks (4 per JBOD) in the 4 JBOD's (4). I created 8 virtual disks on the HDD storage pool using all the available disks and 2 virtual disks on the SSD storage pool.

All virtual disks were created with 64 KB interleave size and the volumes formatted with the same 64KB block size. The data files were placed on the 8 HDD volumes and the tempdb files (12) and the LOG files were placed on a separate SSD volume.

And the performance?

What we saw was when we used only one volume for sequential reads of 512KB we hit already 4.3 GB/s of I/O which is impossible to get when using a traditional RAID10 solution. Using more and more volumes we got up to 8.7 GB/s using only 4 JBOD's.

With a traditional Fast Track reference solution we obtained 9.6 GB/s with 8 disk arrays and 32 LUN's or volumes.

Loading the data was also way easier: we used only 4 parallel loading tasks in SQL Server Integration Services and obtained an effective data load rate of 700 MB/hour. We had actually achieved more than 1 TB/hour but we lost 300 MB/hour on the fact that since SQL Server 2012 data is first loaded in the tempdb before it is transferred to the actual table. This was all visible in the performance tools we used.

It is all about latency!

The most important about this new architecture with Storage Spaces is that the latency was drastically reduced. A Fast Track reference architecture will provide the results with a 24ms+ latency and our ATOS Performance Solutions based on SQL Server 2014 was capable to return the data much faster with less processors (think about the license costs reduction!) and therefore could serve way more users (easily more than a 100 simultaneously in fact).

When using JBOD's it is advised to use only 2 data volumes per JBOD but always create one storage volume over all the JBOD's you have available. The SSD volumes with a small number of SSD disks will always be two for tempdb and LOG files.

Conclusion

Positive side effects of using our ATOS SQL Server 2014 Performance Solution on Storage Spaces instead of the Microsoft Fast Track Reference architecture:

When Microsoft developed the Fast Track Data Warehouse architecture, which was at first very limited in hardware choice and for version SQL Server 2012 became a reference guide, Storage Spaces as a functionality in Windows did not exist.That has changed with the release of Windows 2012 and later on with version 2012R2 and soon 2016.

Why is Storage Spaces as a storage technology so interesting for SQL Server?

Anyone who is a pro in SQL Server knows that parallelism - adding more disks - can greatly improve performance. Adding an hard disk for the tempdb and another one for the LOG files will do the job if the hard disks perform sufficiently (that might be another issue!).

To my opinion (and also to others) SQL Server does not do a great job in using the available hardware. Before and after the installation it is mandatory to tune a SQL Server environment. Already the fact that just formatting the disks used for the data and the logs with 64KB blocks in NTFS can greatly improve performance. After the installation it is also required to set the "Perform Maintenance Tasks" parameter in the Local Security Manager to the account that is running the SQL Server service and also set the "Lock pages in Memory" parameter to prevent the operating system to interfere with the memory management of SQL Server.

And then there is the number of tempdb files and the extends you give them. This is finally changed in the installation of SQL Server 2016 where the install propose the number of tempdb files depending on the number of processors in the system. And the extends for the LOG and DATA files for the tempdb are all set to 64MB and no longer to the 10% for the DATA files and the 1 MB for the LOG files. Finally!

When to use Storage Spaces

When looking closer at Storage Spaces it is a highly parallelized storage system. Of cause in order to get the cookies you have to have the material. When your server has 1 controller and 4 disks you maybe can obtain a performance gain but I think a hardware RAID solution will be better.

However if you have a storage array with 2 controllers and a HBA with 2 connections or a JBOD with 2 controllers and a SAS controller with two connections Storage Spaces might be the way to go for you. Why?

Setting up your storage the traditional way (without Storage Spaces)

When you follow the traditional way, meaning creating RAID1 (mirror) or RAID1 + 0 (striped mirror) drives for more performance you end up having drives which has one or two times the size of the actual disks.

Anyone who starts by saying: "and RAID5 then?" should really question him or herself if he or she really knows how to build high performance SQL Server environments.

Then you tell the HBA controller that that volume will be used by that port and you balance the volumes over the 2 ports and you are ready to go. With a SAS controller you can not do that.

Performance in this RAID1 or RAID10 is also limited to roughly one or twice the performance of a single hard disk, in general 200 MB/s to 400 MB/s reading 512KB blocks and a latency that is not too great (that was an issue in the Microsoft SQL Server Fast Track architecture).

So here you are with several relative small volumes that when the server uses only one volume to collect the data is rather slow. The high performance you wanted can only be obtained when all the volumes are used in parallel. And that might not always be the case...

Setting up your storage using Storage Spaces

As I said before Storage Spaces profits highly from parallelism. So if you need to have 2 or more connections to the same disk and let the controllers manage all disks. If you don't do that and still try to manage access paths on HBA controllers you will not get the same performance as you would get if all HBA controllers can handle all disks. Because parallelism is all about that.

We did tests using existing SQL Server 2014 on Microsoft SQL Server Fast Track reference architectures and the more we limited the access paths of the HBA's - one HBA per disk array or even several disks per disk array - performance went down with several GB/s. We crippled actually the algorithm in Storage Spaces.

What we saw was that with Storage Spaces with had some loss on the GB/s using the standard SQLIO tests for a Fast Track but when we did the test with SQL Server 2014 reducing the 32 data LUN's to 8 data volumes and one dedicated tempdb and one for the LOG's we ended up in increasing the performance in SQL Server 2014 with more than 50% on the same hardware.

For the tests we used a 8 TB table with a clustered index that we compressed to almost 2 TB. We ran the same queries using between 300 million and 40 billion rows!

New architecture for Data Warehousing: ATOS Performance solutions

My tests on existing Microsoft Fast Track reference architectures provided a more than 50% performance improvement using the same hardware and can handle way more simultaneous users.

My newly developed ATOS Performance Solutions based on SQL Server 2014 with Windows 2012R2 Storage Spaces show that we are able serve at least a 100 users simultaneously with a good query performance (from 12s on a single user up to 90s for 100 simultaneous users).

How did we do it?

Like I explained in my previous post we created one storage space for all the hard disks (80) and one for all the SSD disks (4 per JBOD) in the 4 JBOD's (4). I created 8 virtual disks on the HDD storage pool using all the available disks and 2 virtual disks on the SSD storage pool.

All virtual disks were created with 64 KB interleave size and the volumes formatted with the same 64KB block size. The data files were placed on the 8 HDD volumes and the tempdb files (12) and the LOG files were placed on a separate SSD volume.

And the performance?

What we saw was when we used only one volume for sequential reads of 512KB we hit already 4.3 GB/s of I/O which is impossible to get when using a traditional RAID10 solution. Using more and more volumes we got up to 8.7 GB/s using only 4 JBOD's.

With a traditional Fast Track reference solution we obtained 9.6 GB/s with 8 disk arrays and 32 LUN's or volumes.

Loading the data was also way easier: we used only 4 parallel loading tasks in SQL Server Integration Services and obtained an effective data load rate of 700 MB/hour. We had actually achieved more than 1 TB/hour but we lost 300 MB/hour on the fact that since SQL Server 2012 data is first loaded in the tempdb before it is transferred to the actual table. This was all visible in the performance tools we used.

It is all about latency!

The most important about this new architecture with Storage Spaces is that the latency was drastically reduced. A Fast Track reference architecture will provide the results with a 24ms+ latency and our ATOS Performance Solutions based on SQL Server 2014 was capable to return the data much faster with less processors (think about the license costs reduction!) and therefore could serve way more users (easily more than a 100 simultaneously in fact).

When using JBOD's it is advised to use only 2 data volumes per JBOD but always create one storage volume over all the JBOD's you have available. The SSD volumes with a small number of SSD disks will always be two for tempdb and LOG files.

Conclusion

Positive side effects of using our ATOS SQL Server 2014 Performance Solution on Storage Spaces instead of the Microsoft Fast Track Reference architecture:

- way better performance with a smaller number of processors

- lower latency meaning a better query responds and a higher number of users that can be served at the same time

- reduce the number of files at least by 4x compared to using a similar Microsoft Fast Track reference architecture

- no more partitioning needed to obtain a high performance in queries so this reduces the number of files enormously (virtual partitioning for effective data loading)

- way easier to set-up and manage because all elements are build in Windows (no special storage solutions needed)

- with Windows 2016 storage replication is offered meaning that databases can be replicated on block level to an other data centre for the DRP solution

Comments

Post a Comment